The issue with SCRUM, SAFe, DDD and other methodologies

Today I saw someone on Instagram looking up what SCRUM means (obviously trolling), and to be honest, it is both hilarious and cringe-worthy (Dutch only).

She starts reading about SCRUM and is then hit by a waterfall (pun intended) of terminology: Agile, sprints, daily stand-ups, product owners, backlogs and so on… which makes her head hurt…

Having been in the industry for a few decades, I have seen more and more of these concepts emerge: SCRUM, Kanban, SAFe, LeSS, but also other approaches like TDD, BDD, DDD and many, many more…

Over the years, these interesting methodologies, all of which can potentially add a lot of value, tend to show up in places where they do not belong. This leads to mismatched expectations, wrong assumptions and a lot of frustration, mostly caused by cognitive bias.

Cognitive bias? What is that and how is this related?

Let’s start with Wikipedia’s definition of cognitive bias:

A cognitive bias is a systematic pattern of deviation from norm or rationality in judgment.

Individuals create their own “subjective reality” from their perception of the input. An individual’s construction of reality, not the objective input, may dictate their behavior in the world. Thus, cognitive biases may sometimes lead to perceptual distortion, inaccurate judgment, illogical interpretation, or what is broadly called irrationality.

So, in essence, it is about making irrational choices while believing they are rational, because they rest on some wrong assumptions.

Recently, I had my shower and bathtub replaced…

I called one of my friends who does bathroom renovations, and this is how it went:

- He came over to take a look and to get an idea of what we were looking for, the expected time frame, etc.;

- He referred us to a couple of his dealers to choose the products we wanted;

- He sent us a quote for the products;

- He came over again to estimate how many days of actual work it would take;

- He gave me a rough estimate of a couple of days, provided that nothing too unexpected came up;

- We confirmed;

- He ordered;

- When everything was ready to ship, we agreed on a precise date;

- He came over and started on the work;

- When some unexpected extra work came up, he kept me posted on a daily basis;

- The work was delivered and invoiced;

- We paid.

So we basically trusted each other, worked with reasonable ranges and accepted that some risk was involved, which is typically how every project goes…

So what does this have to do with SCRUM?

Nothing, although one could argue that the two approaches share a few patterns. Does my friend know about SCRUM? I expect not, and he should not have to care either.

SCRUM, Agile and all those other methodologies can provide a lot of value to the people executing the work, but they do not add any actual value to the end result itself.

As long as my friend gave me the confidence that he could deliver something reasonable at a reasonable price, within a reasonable time frame, I could not care less whether he was using SCRUM, or Agile, or Waterfall, or whatever the flavor of the month was… And neither did he…

Nor did I care which hammer or drill he used; I assumed he was professional enough to handle them properly.

What’s your point?

Matching expectations in software development is not an easy task per se: scope evolves or is partly unknown, priorities shift, unexpected things happen… The experts involved use methodologies like SCRUM, DDD or whatever else is relevant to them, because these are fit for their purpose.

This is where cognitive bias creeps in; three major things happen:

1. Clients are exposed to IT terminology

As far as I am concerned, this is mostly caused by the way IT’ers communicate with their clients: IT’ers speak their own lingo, and clients automatically pick this up.

As a result, clients talk to IT’ers in terms of the solution or product space (cloud, databases, screens, …) instead of the problem space, i.e. their actual business language.

As clients only have an external view of these solutions, they miss the intricacies that lie beneath the surface, so they might pick the wrong kind of solution or product to start with.

Enterprise architecture makes an explicit distinction between the problem space/value proposition, solution design and products, but most clients have a hard time discussing their case at the right level.

This might cause you to kick off a project with the wrong solution from the start, creating more overhead instead of less.

2. A methodology might be picked for the wrong reasons

Sometimes clients associate a methodology with success, thinking it is a silver bullet (i.e. that using it guarantees success in every context).

However, there is always a context where a methodology works, and one where it does not work at all.

Most methodologies have both advantages and disadvantages, so the more experience you have with a methodology, the better you understand which parts to use when, and when to avoid it altogether.

As methodologies usually need to be adapted to their context (cf. “No Silver Bullet”), there is room for interpretation. Because less experienced people tend to follow thought leaders or the flavour of the month, or even practice résumé-driven development, you might end up with a half-assed implementation that is more of a nuisance than a benefit.

Take the whole microservices movement as a simple example: the fact that it was successful in some very large or volatile organizations does not mean it is well suited everywhere. (My advice would be to start with a well-architected mono-repo and only split it up when you have non-functional constraints, whether organizational, driven by quality attributes or something else…)

3. Leaky abstractions

As clients lack expertise in these areas, they fall victim to leaky abstractions.

A good example would be a progress report:

- If the project spans a longer time frame, clients typically want intermediate progress reports, preferably expressed as percent complete and in absolute terms of scope, time and quality;

- If you are lucky, the client will accept (some percentage of) uncertainty, but most of the time they will want you to factor this uncertainty into your calculations, which brings us to Hofstadter’s Law:

Hofstadter’s Law: It always takes longer than you expect, even when you take into account Hofstadter’s Law;

- As you expose clients to your burndown charts, PI planning or whatever you are using, the client usually ends up with a highly simplified interpretation of a metric, because truly understanding a metric requires deep insight and a lot of expertise. Story points are a good example: people simply convert them into hours or days, without understanding the risk involved;

- As the project advances, metrics change. Most of the time the client fails to accept that the metric is volatile, gets frustrated, and trust erodes.

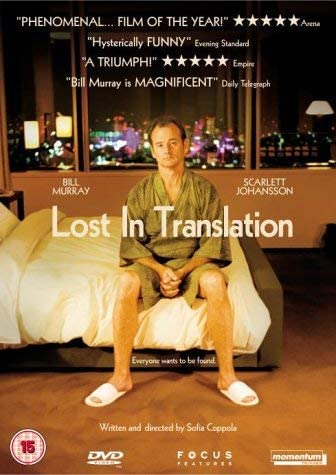

Clients rarely interpret results in the same way you do; there are always little nuances that get lost in translation.

The solution

In my opinion, the solution is threefold:

1. Avoid tech lingo/solution space when talking to your clients

Try to speak the language of the business. You might think you make an impression by showing off all your knowledge about a certain methodology, but in reality this does not bring the client any closer to a solution. Only share the absolute minimum you cannot avoid.

2. FIT-GAP analysis

Is the methodology actually fit for purpose, or is it driven by something else?

If it is based on success stories, how similar is the current context to the context of the story?

How steep is the learning curve of the methodology, and how much experience do you have with it? Will it require capability development? Does the methodology fit within the current capabilities? (Both yours and the client’s.)

3. Scope and risk management

Progress is hard to measure when there are a lot of unknowns.

Estimating is even harder. Luckily, for existing teams in an evolving context, there are scientific ways to estimate based on Monte Carlo simulations. For new projects, I have failed to find a proper science-based technique… (Check out my previous blog posts on these subjects if you are into that kind of stuff.)
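To give an idea of what such a simulation looks like, here is a minimal Python sketch. It assumes the team records how many work items it finishes each week. It replays that (hypothetical) weekly throughput history thousands of times to see how many weeks the remaining work takes, so you can answer with a range and a confidence level instead of a single date. The function names and sample numbers are illustrative only. This is not necessarily the exact approach from my earlier posts.

```python
import math
import random


def forecast_weeks(throughput_history, remaining_items, runs=10_000, seed=None):
    """Return a sorted list holding, for each simulated run, the number of
    weeks needed to finish `remaining_items`.

    Each simulated week draws a random week from the team's past
    throughput (items finished per week), i.e. a simple bootstrap.
    The names and parameters here are hypothetical, for illustration only.
    """
    if not throughput_history or max(throughput_history) <= 0:
        raise ValueError("need at least one past week with finished items")
    rng = random.Random(seed)
    outcomes = []
    for _ in range(runs):
        done, weeks = 0, 0
        while done < remaining_items:
            done += rng.choice(throughput_history)  # replay a random past week
            weeks += 1
        outcomes.append(weeks)
    outcomes.sort()
    return outcomes


def percentile(sorted_values, p):
    """Nearest-rank percentile (0 < p <= 100) of an already sorted list."""
    rank = math.ceil(p * len(sorted_values) / 100)
    return sorted_values[max(rank - 1, 0)]


if __name__ == "__main__":
    # Hypothetical history: items finished in each of the last 8 weeks.
    history = [3, 5, 2, 6, 4, 0, 5, 3]
    outcomes = forecast_weeks(history, remaining_items=40, seed=42)
    for p in (50, 85, 95):
        print(f"{p}% chance to finish within {percentile(outcomes, p)} weeks")
```

A statement like "85% chance to finish within N weeks" puts the uncertainty on the table instead of hiding it. That still only helps if you explain it in the client’s terms rather than handing over the raw distribution.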

Figure out the best way to communicate your progress to the client. They are usually bound to a similar framework within their own organisation, so adapt and be flexible.

In the end, this is mostly a matter of trust. Take that into account next time you report progress!

Bonus: What I learned managing my own SaaS

For Virtual Sales Lab, a SaaS that provides online product configurators, we have tried many different approaches to selling projects.

In our early projects, we took huge losses because, among other things, we lacked a proper definition of done… In one case we even failed to deliver.

Fast forward to a few years later…

For new clients, we now typically start with a development period of 1 to 3 weeks, which is enough time to build something they can actually put on their website. We post our progress from the first or second day onwards, so the client can see how everything evolves, and we are up for the challenge.

After a few days, trust is established, and by the end of the agreed term both the client and we are satisfied.

The client does not care about the fact that the current front-end architecture is a bit of a mess, or that we track some of our stuff in Excel.

We could use SC(R)UM, Kanban or anything else, but, quite frankly my dear, it does not matter to the client. All he cares about is having a tool on his website that allows people to experiment with different product configurations and ask for more info.

Wrapping up

Every single time, I set out to write a short and simple blog post, and I end up writing a lot more… I guess estimating things is really hard ;).

I hope this post helps you consider whether your specific methodologies are fit for purpose, and whether exposing them to the client is beneficial or not.

T.